As AI becomes increasingly prevalent in society, concerns about transparency are becoming more pressing. While AI operates in the realm of numbers, we hope to communicate with it the way we communicate with each other, using words and concepts. We want to be able to apply ideas like “truth” and “deception” to it. We want artificial neural networks (ANNs) to act like the natural neural networks (NNNs) inside our skulls. But there’s a problem: natural neural networks are full of shit.

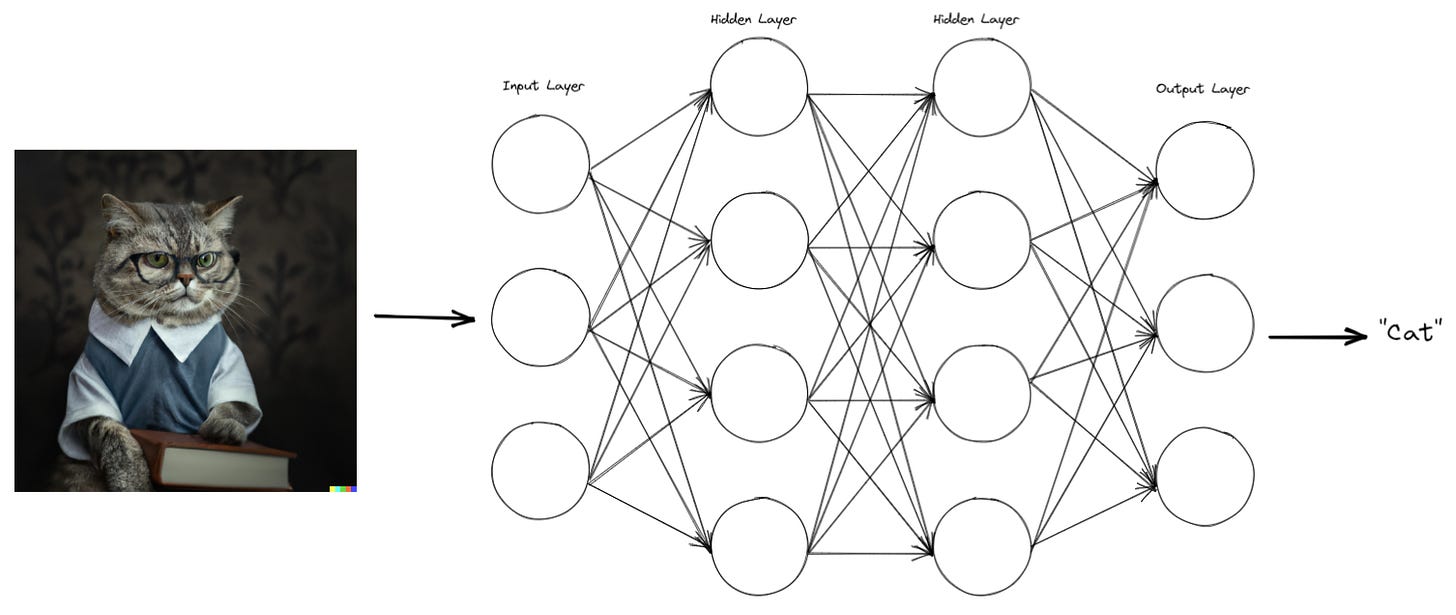

Let’s start by clearing up some misconceptions. People often think that ANNs are black boxes. But artificial neural networks are, in fact, entirely transparent. Every single connection between neurons in an ANN can be scrutinized, including the so-called “hidden” layers. There’s nothing secret inside an ANN. When an image is fed into an ANN, it’s possible to trace the flow of information from the input image to the output label. The process is entirely deterministic and transparent. Even the training is transparent and understandable, and could be deterministic if we wanted (we intentionally prefer it to be stochastic). The term “black box” is used because humans can’t understand the information, not because the information within the ANN is hidden.
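To make the “nothing is hidden” point concrete, here is a minimal sketch in pure Python of a hypothetical toy network: 3 inputs, 4 hidden ReLU units, 2 outputs, no biases, with weights drawn from a seeded random generator. None of this comes from a real model, and `make_network` and `forward_with_trace` are illustrative names, not a library API. The forward pass records every intermediate value, including the “hidden” layer, and running it twice on the same input yields an identical trace.

```python
import math
import random


def make_network(seed=0):
    """Hypothetical toy 3-4-2 network; seeded so the weights are reproducible."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]  # input -> hidden
    w2 = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(2)]  # hidden -> output
    return w1, w2


def forward_with_trace(w1, w2, x):
    """Forward pass that records every intermediate value, 'hidden' layer included."""
    trace = {"input": list(x)}
    trace["hidden_pre"] = [sum(w * xi for w, xi in zip(row, x)) for row in w1]
    trace["hidden"] = [max(0.0, h) for h in trace["hidden_pre"]]  # ReLU
    trace["logits"] = [sum(w * h for w, h in zip(row, trace["hidden"])) for row in w2]
    # Numerically stable softmax turns the logits into class probabilities.
    m = max(trace["logits"])
    exps = [math.exp(z - m) for z in trace["logits"]]
    trace["probs"] = [e / sum(exps) for e in exps]
    return trace


w1, w2 = make_network()
x = [0.2, -0.5, 0.9]
first = forward_with_trace(w1, w2, x)
second = forward_with_trace(w1, w2, x)
assert first == second  # same weights + same input -> identical trace, every time

for layer, values in first.items():
    print(f"{layer:>10}: {[round(v, 3) for v in values]}")
```

Every number in the trace is available for inspection. What the trace does not provide is a reason a human would recognize.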

When we say that ANNs “must be transparent”, we really mean they “must be explainable to humans,” as that’s the real goal. Explainability is a very different task from transparency. We want to understand how an ANN arrived at a particular decision or prediction. Watching a series of numbers transform through matrix multiplications isn’t a human’s idea of transparency. We need it to point to a picture and say, “See those cute cat ears, those cat reading glasses, and the distinguished starched collar you couldn’t catch a dog wearing? That’s why I think it’s a cat.” This seems reasonable. Humans can provide comprehensible explanations; why can’t an AI? But this is a false premise. Probe human explanations and you find cracks and gaps.

Consider the trolley problem: if a trolley is barreling down the tracks toward five people, would you pull a lever to divert it onto another track with only one person on it?

Most people say “yes”. When asked why, their answers are something like “because it’s better for one person to die than five.” OK, that makes sense. An NNN gave a reasonable explanation, and we have no reason to doubt it. (Next stop: reason to doubt it.)

Let’s say that we have the same trolley, but there is no alternative track. You’re on a bridge overlooking the tracks, and there’s nothing you can do to stop the trolley. Except… there’s a very large man next to you, leaning precariously over the tracks. One firm push would knock him off balance and onto the tracks, and, based on your extensive experience as a train derailment engineer, you calculate that his fall would certainly derail the trolley. Would you push him?

All of a sudden, “It’s better for one person to die than five” doesn’t feel like such a great explanation. The justification you provided isn’t the full story. You thought you knew why you would act a certain way, but on closer inspection, your justification is incomplete. We often offer justifications that feel ironclad yet fall apart as soon as someone pushes on them.

The problem goes much further than philosophical musings. Research on choice blindness, where people are unaware that their choices have been secretly altered (paper here; summary here; though I recommend the 3-minute video here), highlights this as well. Participants were asked to choose which of two faces they found more attractive. Then they were handed the picture of the one they selected and asked to justify their decision. The trick was that sometimes the pictures were swapped, and they were looking at the picture they didn’t choose. The majority of participants never picked up on the trick. Despite the swap, despite looking at the picture they didn’t choose, they were still able to justify why they “chose” it.

This is rationalization, plain and simple. This is what natural neural networks do—they are rationalization machines. They receive your actions as input and output a tidy narrative about why it all makes sense. Sometimes the story is true and complete; sometimes it isn’t.

The same phenomenon, which we might dub the “natural neural networks are full of shit” phenomenon, shows up in other fields. A study in which researchers used transcranial magnetic stimulation (TMS) to control participants’ movements provides another example. The experimental setup was as follows: participants watched a screen and, upon receiving a cue, decided whether to twitch their left or right hand. The researchers used fMRI to determine which hand the participant had selected by examining activity in the corresponding motor cortex. A few seconds later, a second cue would appear, and participants would make the movement they had decided on (i.e., twitch their right or left hand). But sometimes a trick was played:

> For a few key trials in this experiment, however, when the signal to move was given, [the researcher] would deliver a jolt to the motor cortex that the participant had not selected. This caused the wrong hand to move.

Now, if our conscious experience of decisions is in control, this should feel strange – really strange. You’ve decided to do one thing, but your body has been hijacked and made to do something else.

The strangest thing about the results of this study is that the participants didn’t even seem to notice that their brains had been hijacked.

Most participants had a very simple description of the experience. When asked why they moved their left hand, when their brain activity made it seem like they were going to move their right hand, most participants gave a nonchalant answer: “I just changed my mind.”

People can rationalize their actions even when someone else controls those actions. Natural neural networks are master confabulators.

More evidence that NNNs cannot be trusted can be found throughout the psychological literature. We have to be careful here, because much psychology research doesn’t stand up to replication. However, one seminal paper on false memories was retested, and the new study, with a larger sample size, a pre-registered analysis, and improved methodology, confirmed the effect. People really do create false memories; they can believe, and even testify to, events that never happened. This phenomenon probably contributed to the Satanic panic of the 1980s.

This all reminds me of the “hungry judge effect”. A study of Israeli judges found that they were more likely to grant parole to prisoners if they had just eaten, suggesting that hunger significantly influences decision-making. As amusing as the study is, I’m not convinced by the methodology and have never heard of it being replicated, so I doubt it’s a real effect, at least at the magnitude described in the study. The whole thing depends on several unstated assumptions, such as that the type of case and the time of day are independent. A separate study followed up by interviewing people from the Israeli Prison Service and learned that “case ordering is not random”. They found that groups of cases were heard in a single session, and “within each session, unrepresented prisoners usually go last and are less likely to be granted parole than prisoners with attorneys.”

Although the original study shouldn’t be taken as evidence per se, I still think it’s interesting. I think part of the reason the study got so much attention (other than the shocking result) is that it hints at something we all know is true. There are socially acceptable and socially unacceptable justifications for actions. A judge who wants to keep their job would be unwise to say, “I denied parole because it’s the quicker option, and I was getting hungry.” Likewise, if you ask a doctor why they scheduled a C-section, the answer is some combination of “for the health of the mother” and “for the health of the child”. This is despite the fact that doctors make more from C-sections and there is considerable evidence that they are influenced by financial incentives (articles from The Economist and NPR; academic papers here, here, and here). This isn’t peculiar to experts; this is a human thing. I’ve never met someone who said they voted for a candidate because the candidate was tall, but isn’t it quite the statistical anomaly that we haven’t had a short president in over 100 years?

Many more examples of NNN failures were studied by Amos Tversky and Daniel Kahneman and are detailed in Kahneman’s book *Thinking, Fast and Slow* (I have to throw in another warning: it’s a decidedly “pre-replication crisis” book. Some of it doesn’t stand up to scrutiny, but I think the general concepts are still valid).

What does this all mean? It doesn’t mean that understanding between ANNs and NNNs is impossible. As we saw in the overview of AI explainability, there are a variety of ways to build that understanding. But it does mean there’s a limit.

We have an exaggerated notion of NNN transparency and a dismissive one of ANN transparency. Again, the true reason for any ANN decision is fully transparent – follow the input through the network and you get the full story. But that story isn’t understandable by humans, and any approach we take will be an approximation of the complete answer. The translation from ANN transparency to NNN transparency will always be lossy because of the limitations on the NNN side.
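A toy illustration of that lossiness, under heavy simplifying assumptions: a linear scorer stands in for the network (real ANNs are not linear), the feature names borrow from the cat example earlier, and the weights and inputs are made up. The complete “reason” for the score is the sum of every feature’s contribution; a human-sized explanation keeps only the top two, and whatever it drops is left unexplained. The helper names `contributions` and `top_k_explanation` are hypothetical.

```python
def contributions(weights, x):
    """Per-feature contribution w_i * x_i to a linear score (toy stand-in for a network)."""
    return {name: weights[name] * x[name] for name in weights}


def top_k_explanation(contribs, k):
    """Keep only the k largest-magnitude contributions: a human-sized summary."""
    ranked = sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return dict(ranked[:k])


# Made-up weights and inputs, for illustration only.
weights = {"ears": 2.0, "glasses": 0.5, "collar": 1.2, "fur_texture": 0.9, "background": -0.4}
x = {"ears": 0.9, "glasses": 0.8, "collar": 0.7, "fur_texture": 0.6, "background": 0.5}

full = contributions(weights, x)             # the complete account
score = sum(full.values())                   # what the model actually outputs
summary = top_k_explanation(full, k=2)       # what we can tell a human
unexplained = score - sum(summary.values())  # what the summary leaves out

print(f"score={score:.2f}, explained by {list(summary)}, unexplained={unexplained:.2f}")
```

In a real network the contributions are typically not even additive, so any short summary is a rougher approximation still.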

The key point here is that there’s a limit to what we call transparency. There is no reason to believe that perfect transparency exists for NNNs, and good reason to believe it doesn’t. There might not always be a right answer to the question “why?” There is probably no definitive answer to “What is the right thing to do?” We rationalize our way through the world and somehow make things work out. NNNs are clearly the product of evolution rather than deliberate design. This is bad news for ANN transparency research, as no amount of focus on the bow is going to let it hit a target that doesn’t exist.