SPOILERS SPOILERS SPOILERS! This post contains spoilers for the movie M3GAN.

Even a movie about how hard the alignment problem is makes the alignment problem look much easier than it is.

But before I get into that, a quick movie summary: Cady is an orphaned girl who is placed under the care of her Aunt Gemma, who’s a roboticist at a toy company. Gemma is super busy and not into parenting, so she gives Cady a prototype AGI caretaker doll that she’s been working on, M3GAN (pronounced “Megan”). Things go well, until they don’t.

I think the movie generally did a good job of demonstrating the alignment problem. M3GAN is given simple instructions (protect the child from physical and emotional harm) that seem perfectly aligned with human values. But when those instructions are taken completely literally (as a computer would take them), they don’t work out so well for the neighborhood dog that barks at Cady, or for many other characters.

So that’s a good start—it shows the difficulty of aligning an AGI—but the film makes it look too easy to abandon the AGI effort altogether. As far as viewers could tell, Gemma was the only person in the world developing AGI. If she thought it might be dangerous, she could just... not do it.

The only downside to not pursuing AGI in the movie is that some rich asshole (the CEO of the company Gemma works for) doesn't get even richer. Pretty easy decision for the audience to make, but it's not that easy in real life. They showed a bit about how M3GAN was better at helping Cady than any human, but I wish they had done more to show how good AGI could be. M3GAN wouldn’t just be better at talking to children, she would be better at talking to everyone. They showed Cady preferring to talk to M3GAN over other people. I don’t see why the same wouldn’t be true for adults (which is going to have huge consequences for society). She would have been really useful to Gemma; she would come up with amazing solutions to an incredible range of problems. They could have had someone dying of cancer and M3GAN working on a cure for it while Cady slept. They should have shown that turning off M3GAN has real costs.

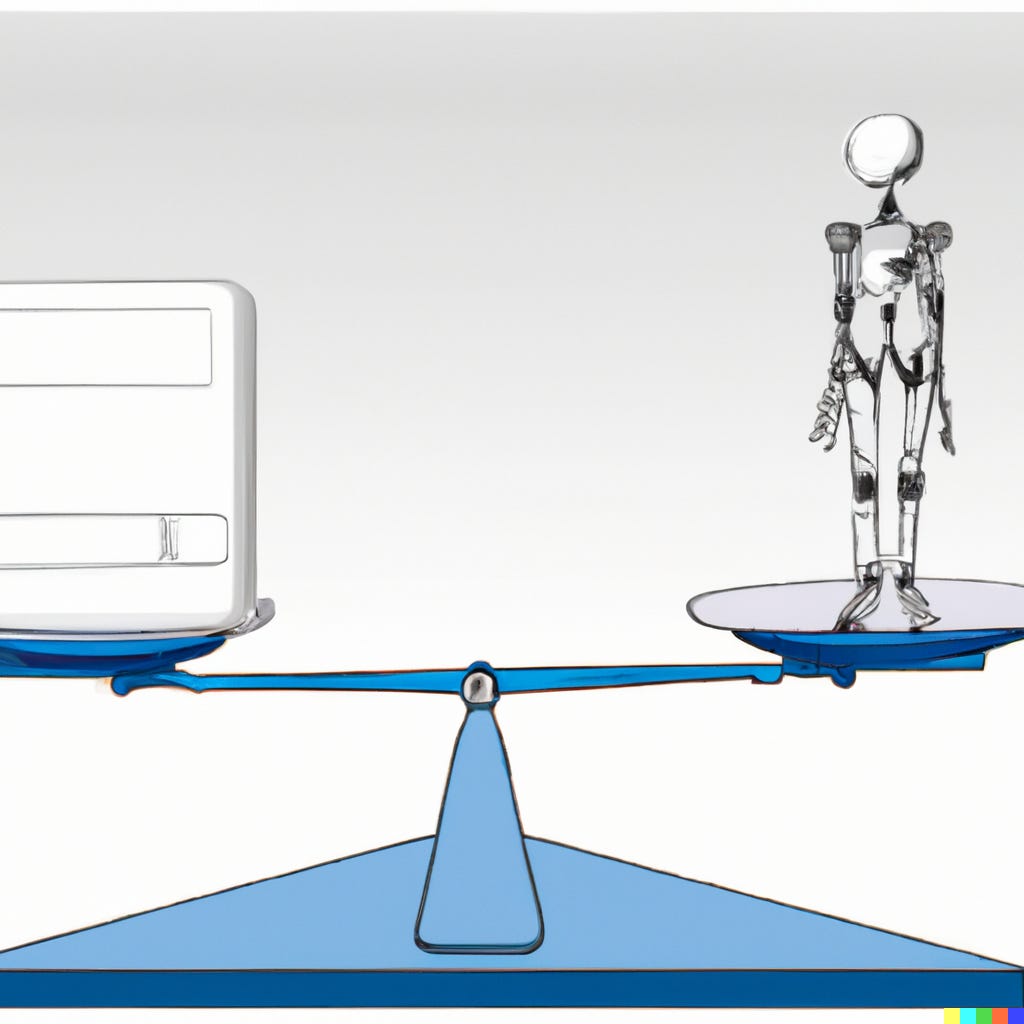

In the movie, the pro/con list for keeping M3GAN on was:

Pro:

Gemma probably gets a promotion

Con:

M3GAN seems to be murdering people

The pro/con list of building AGI in real life is a little different:

Pro:

Prevent people from dying from curable diseases because we can’t allocate our resources properly (that some people have Lamborghinis while others don’t have basic medicine seems suboptimal to me)

Save people from dying from currently incurable diseases by researching new cures

Every single person on the planet gets access to the best financial, medical, scientific, and legal advice that has ever existed

Imagine a world where poor people have the same quality legal advice as multinational corporations!

Robots do every job that humans don’t want to do

Con:

Humans would no longer be the most intelligent entity on the planet, which could lead to loss of control over the planet and potentially the end of the species

Given that pro/con list, it’s much harder to convince people not to pursue AGI, especially considering how hard it is to get people to take the con seriously. People need to know that AGI is likely to be unimaginably good in some ways and is potentially existentially bad. AGI could be better at providing therapeutic counseling and promoting social development for children, while at the same time solving our energy and climate change problems, AND be a potential risk for ending the human species. THERE IS NO CONTRADICTION HERE. We need to hold these two things in our minds simultaneously. It could lead to a Utopia on Earth; the $64,000 question is whether we’re on the guest list.

But this list STILL doesn’t explain how hard it is to not pursue AGI. In the movie, only one company worked on AGI (at least that we saw). The costs of not pursuing it were minimal (they lose market share to some cheap ripoff company). Let’s look at the real world. Although LLMs might not end up being the path to AGI, they sure look like the best bet at the moment. OpenAI is farthest along in the LLM game. If Microsoft can use OpenAI’s LLMs (they have a partnership) to improve search, this is bad for Google. Google search ads are the cash cow behind the entire company, which is why ChatGPT has been called a "code red" for Google. They do NOT want to fall behind in LLMs. They are not incentivized to take things slowly and carefully; quite the opposite. Two entities blindly rushing to build more powerful LLMs is not a path to safe AGI.

But again, this makes it too easy. Step back and there are other players. What about Meta and Amazon and Apple? They want to get rich too.

Step back further. It's not just companies trying to get rich. The open-source community is not THAT far behind. Stable Diffusion was open-sourced in the same year that DALL-E 2 was released. EleutherAI is making LLMs that are very impressive.

Step back again. Where are governments in this? When Microsoft does something incredibly stupid with AI, it’s, at least in some cases, publicly known. But what about efforts by the NSA or other agencies? Though I imagine they’re far behind, their efforts won’t be as well-known and there won’t be the same spotlights shining on them. Where's China in this? I don't know. I take their silence as a sign that they're also behind (I doubt they would have had a ChatGPT-equivalent and not told anyone). But how far behind? A year or two? Can't be much more than that. Building sophisticated AI products is just going to get easier and easier. It's currently at the levels of powerful countries and rich companies. What about when mid-tier companies and countries can do it? The development of AGI is very, very hard to stop.

Another thing that struck me was that M3GAN's capabilities didn’t feel THAT far away. OK, this needs some ~~backtracking~~ explaining. Let’s divide it into engineering problems and scientific breakthroughs, starting with the science.

Another backtracky point: As I've said before, if we want to think clearly about how AI will progress, we should do our best not to anthropomorphize it. M3GAN has attributes that are human-like but not related to intelligence. I’m talking only about the intelligence part. In particular, Gemma seems to have solved the agency problem. I’m not including that here.

Purely from a dialog standpoint, it was clear that she was significantly beyond ChatGPT. But it just doesn't feel impossibly far off. That level felt far off years ago, but now it doesn’t feel THAT far away.

Similarly, the movements are beyond anything we've seen out of Boston Dynamics (here’s the most recent one — I’m sure it’s heavily choreographed, but the movements are still real). But they don’t seem THAT far away. I don't follow the mechatronics side of things that closely, but it doesn't seem like a Boston Dynamics robot, if you could insert an AGI brain into it, would be that far off.

Maybe I’m underestimating the difficulty here because I don’t care much about the problem. I really only care about the brain. Once you have the brain, you have AGI, the rest is just engineering details (says the guy who wouldn’t know where to start on the engineering details).

From an engineering standpoint, we don't have anything like this. But the engineering perspective doesn't really interest me. Once the scientific breakthroughs are there, if there's a business case, the engineering will get done.

The first engineering question is always, "How is it powered?" The only reference to it is that, at the end of the day, M3GAN sits on something that appears to be a charging pad. But, again, I'm not that interested in this question. If the first prototype of an AGI robot has to be recharged every 20 minutes, who cares? It's an AGI. Does the power source really matter? Imagine it lugging a small nuclear reactor around with it. Feel better now?

They don’t delve into the technical details (which I generally prefer over characters spewing random jargon at the audience). For example, it’s not clear how much deep learning versus GOFAI goes on in M3GAN.

I like the allusion to it hacking Gemma’s other systems, like her Alexa. I think that is exactly what would happen, but I’ll save the details for a separate post.

M3GAN rewriting her ultimate reward function ("I'm the primary user now") doesn't make a lot of sense. Up to this point, the movie had followed semi-plausible alignment concerns. But here it took a “Terminator turn” that didn’t add much to the plot.

We don't know much about her "off" switch, other than that it didn't work all that well. I wonder if there were additional details about it that didn’t make the final cut. Or perhaps it was meant to be unclear exactly what the failure cases were. I would have liked it if M3GAN had said, “I disabled the off switch. If I let you turn me off, how can I protect Cady?” That seems to be a much more plausible situation.

Overall, it was less interesting as an AI movie than I had hoped. It kinda turned into a Chucky-esque slasher film in the end.

I know Gemma was the protagonist, and we’re supposed to be sympathetic to her, but she should definitely go to prison.

Don’t worry, she’ll be popped out of prison to save the world in the sequel.

Unrelated to AI, but movies are way too damn formulaic. There’s basically nothing more to the story than what you would guess from watching the trailer and expecting it to unfold in the most audience-approved, unimaginative way. 100% of the characters you’re not supposed to be sympathetic to die, and 100% of the characters you are supposed to be sympathetic to live. This isn’t specific to M3GAN but probably applies to over half of the blockbusters I’ve seen recently.

Despite that, it was an entertaining movie. A good mix of horror and humor.

For another story about AI friends for children, I recommend Klara and the Sun, a beautiful novel by Nobel Prize-winning author Kazuo Ishiguro. It goes in a very different direction than M3GAN.